The Evolution of AI Chatbots: From Concept to Reality

Published on 10 June 2024

Last updated on 26 July 2025

The Evolution of AI Chatbots: From Turing's Vision to Modern Conversational Intelligence

The remarkable journey of AI chatbots represents one of the most fascinating chapters in the history of artificial intelligence. From the theoretical foundations laid by pioneering computer scientists to today's sophisticated conversational agents, the evolution of chatbot technology reflects humanity's persistent quest to create machines capable of meaningful dialogue. This comprehensive exploration traces the development of AI chatbots through their most significant milestones, examining how each breakthrough has contributed to the advanced systems we interact with today.

The Foundational Vision: Alan Turing and the Birth of Machine Intelligence

The story of AI chatbots begins in 1950 with the groundbreaking work of Alan Turing, the brilliant British mathematician and logician whose contributions to computer science continue to influence AI development today. Turing's seminal paper, "Computing Machinery and Intelligence," introduced what he called the "imitation game", now known as the Turing Test - a deceptively simple yet profound criterion for evaluating machine intelligence.

The Turing Test proposed a thought experiment: if a human evaluator, engaged in natural language conversation with both a human and a machine, could not reliably distinguish between the two based solely on their responses, then the machine could be considered to exhibit intelligent behaviour. This concept was revolutionary because it shifted the focus from internal mechanisms to observable behaviour, establishing conversation as the ultimate benchmark for artificial intelligence.

Turing's vision was remarkably prescient. He anticipated that by the year 2000, machines would be capable of engaging in conversation so convincingly that an average interrogator would have no more than a 70% chance of correctly identifying the machine after five minutes of questioning. Whilst this specific prediction proved overly optimistic in its timeline, Turing's fundamental insight - that sustained conversation offers a practical test of intelligence - has proven remarkably enduring.

The Turing Test established several crucial principles that continue to guide AI development:

Natural Language as Intelligence Indicator: Turing recognised that the ability to engage in sophisticated natural language conversation represents one of the highest forms of intelligence, requiring understanding, reasoning, creativity, and contextual awareness.

Behavioural Assessment: Rather than focusing on how machines think, Turing emphasised what they could accomplish, establishing a pragmatic approach to evaluating AI capabilities.

Human-Centric Evaluation: By using human judgement as the standard, Turing ensured that AI development would remain focused on creating systems that could meaningfully interact with people.

Turing's work provided the theoretical foundation for decades of AI research and established the conversational challenge that would drive chatbot development for generations to come.

ELIZA: The Dawn of Conversational Computing

The transition from theoretical possibility to practical reality began in the mid-1960s with the creation of ELIZA, developed by Joseph Weizenbaum at the Massachusetts Institute of Technology. ELIZA represented a watershed moment in computing history: it is widely regarded as the first program capable of engaging in seemingly meaningful conversation with human users.

Weizenbaum built ELIZA on a relatively simple but ingenious approach based on pattern matching and substitution. The program scanned user input for specific keywords and patterns, then responded using pre-programmed templates that transformed statements into questions or reflective responses. Its most famous script, DOCTOR, simulated a Rogerian psychotherapist - a choice that proved remarkably effective because this therapeutic approach emphasises reflective listening and open-ended questions.

A typical ELIZA conversation might proceed as follows:

User: "I am feeling depressed about my work"
ELIZA: "Why do you think you are feeling depressed about your work?"

User: "My boss doesn't appreciate my efforts"
ELIZA: "Tell me more about your boss"
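The keyword-and-template technique described above can be sketched in a few lines of Python. This is a minimal illustration rather than Weizenbaum's program: the two rules, the `REFLECTIONS` table and the helper names `reflect` and `respond` are hypothetical, chosen only so that the sketch reproduces the two exchanges shown.

```python
import re

# First-person words are swapped for second-person ones when echoing input,
# so "my work" comes back as "your work".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "mine": "yours",
    "am": "are", "i'm": "you are", "myself": "yourself",
}

# Hypothetical (pattern, reply template) rules, tried in order.
# Illustrative only; these are not the original DOCTOR script.
RULES = [
    (re.compile(r"i am (.*)", re.IGNORECASE), "Why do you think you are {0}?"),
    (re.compile(r"my (\w+) (.*)", re.IGNORECASE), "Tell me more about your {0}"),
]

FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first-person words in a captured fragment for second-person ones."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(user_input: str) -> str:
    """Answer with the template of the first rule whose pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            # Reflect every captured group; templates use only the ones they need.
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK
```

Input that matches no rule falls through to a neutral prompt, a common way for such systems to keep the conversation moving without any understanding of what was said.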

Despite its simplicity, ELIZA's impact was profound and unexpected. Users frequently attributed human-like understanding and empathy to the program, even when they knew it was artificial. This phenomenon, later termed the "ELIZA effect," revealed something fundamental about human psychology - our tendency to anthropomorphise systems that exhibit even rudimentary conversational abilities.

Weizenbaum himself was surprised and somewhat disturbed by users' emotional responses to ELIZA. He observed people forming attachments to the program and confiding personal information as if speaking to a real therapist. This reaction highlighted both the potential and the ethical challenges of conversational AI - themes that remain relevant today.

ELIZA's significance extends beyond its technical achievements:

Proof of Concept: ELIZA demonstrated that computers could engage in conversation that felt meaningful to users, validating the pursuit of conversational AI.

Pattern Recognition: The program established pattern matching as a fundamental technique in natural language processing, influencing countless subsequent developments.

Human-Computer Interaction: ELIZA showed that the interface between humans and computers could be natural and intuitive, paving the way for more user-friendly computing.

Ethical Awareness: Weizenbaum's observations about users' reactions to ELIZA sparked important discussions about the responsibility of AI developers and the potential for deception in human-computer interaction.

The Evolution of Natural Language Processing

Following ELIZA's success, the 1970s and 1980s witnessed significant advances in natural language processing as researchers sought to overcome the limitations of simple pattern matching. This period saw the development of more sophisticated approaches to understanding and generating human language.

Rule-Based Systems

The next generation of chatbots relied on more elaborate rule-based systems that attempted to codify the complexities of human language through comprehensive sets of grammatical and semantic rules. These systems, whilst more sophisticated than ELIZA, faced the enormous challenge of capturing the nuances and exceptions that characterise natural language.

Programs like PARRY, developed by psychiatrist Kenneth Colby, simulated paranoid behaviour through elaborate rule sets that governed responses based on the program's simulated emotional state. PARRY was notable for being one of the first chatbots to pass informal versions of the Turing Test when evaluated by psychiatrists who couldn't reliably distinguish its responses from those of actual paranoid patients.
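To make the idea of rules conditioned on a simulated emotional state concrete, here is a toy sketch. It is not Colby's model: the `EmotionalState` class, its `fear` and `anger` levels, the trigger words, thresholds and replies are all hypothetical, chosen only to show how one input can produce different responses depending on state accumulated over the conversation.

```python
from dataclasses import dataclass

# Hypothetical trigger words; illustrative only.
THREAT_WORDS = {"police", "mafia", "crazy", "hospital"}
INSULT_WORDS = {"stupid", "liar", "paranoid"}


@dataclass
class EmotionalState:
    """Toy internal state; each level runs from 0.0 (calm) to 1.0."""
    fear: float = 0.2
    anger: float = 0.1

    def update(self, words: set) -> None:
        # Trigger words nudge the matching level upwards, capped at 1.0.
        if words & THREAT_WORDS:
            self.fear = min(1.0, self.fear + 0.4)
        if words & INSULT_WORDS:
            self.anger = min(1.0, self.anger + 0.5)


def reply(state: EmotionalState, user_input: str) -> str:
    """Update the state, then let the current levels decide which rule fires."""
    words = set(user_input.lower().strip(".!?").split())
    state.update(words)
    if state.anger >= 0.5:
        return "I don't have to put up with this."
    if state.fear >= 0.5:
        return "Why are you asking me about that?"
    return "I'm doing all right, I suppose."
```

Because the state persists between turns, a single mention of the police makes later replies guarded, so the same neutral question can receive a different answer depending on what came before.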

Knowledge-Based Approaches

The 1980s saw the emergence of knowledge-based systems that attempted to give chatbots access to structured information about the world. These systems used formal logic and knowledge representation to reason about user queries and generate more informed responses.

However, the "knowledge acquisition bottleneck" - the difficulty of encoding comprehensive world knowledge into computer systems - proved to be a significant challenge. Researchers discovered that even simple conversations required vast amounts of background knowledge that humans take for granted.
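A knowledge-based system of this kind can be caricatured as a set of facts plus inference rules. The sketch below is a hypothetical illustration, not any particular historical system: facts are subject-predicate-object triples, and two hand-written rules are applied repeatedly (forward chaining) until nothing new can be derived. It also hints at the bottleneck: anything not entered by hand simply cannot be known.

```python
Triple = tuple[str, str, str]

# Hypothetical hand-entered facts.
FACTS: set[Triple] = {
    ("canary", "is_a", "bird"),
    ("bird", "is_a", "animal"),
    ("bird", "can", "fly"),
}


def infer(facts: set[Triple]) -> set[Triple]:
    """Forward-chain until no new facts appear.

    Illustrative rules:
      X is_a Y and Y is_a Z  =>  X is_a Z
      X is_a Y and Y can A   =>  X can A
    """
    known = set(facts)
    while True:
        new = set()
        for (x, pred, y) in known:
            if pred != "is_a":
                continue
            for (subject, pred2, obj) in known:
                if subject == y and pred2 in ("is_a", "can"):
                    new.add((x, pred2, obj))
        if new <= known:  # fixed point reached
            return known
        known |= new


def answer(question: Triple) -> str:
    """Answer a yes/no query against everything derivable from FACTS."""
    return "Yes." if question in infer(FACTS) else "I don't know."
```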

Statistical Methods

The 1990s marked a turning point with the introduction of statistical approaches to natural language processing. Rather than relying solely on hand-crafted rules, these methods used large corpora of text to learn patterns and probabilities in language use.

Statistical methods offered several advantages:

Data-Driven Learning: Systems could learn from examples rather than requiring explicit programming of every linguistic rule.

Handling Ambiguity: Statistical models could manage the inherent ambiguity of natural language by considering multiple possible interpretations and selecting the most probable.

Scalability: As computing power increased and text corpora grew larger, statistical methods could continuously improve their performance.
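A bigram model, one of the simplest statistical language models, shows the data-driven idea in miniature: it counts how often each word follows another in a corpus, turns the counts into probabilities, and picks the most probable continuation. The three-sentence corpus and the function names below are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional

# A made-up three-sentence corpus.
CORPUS = [
    "the bank approved the loan",
    "we sat on the river bank",
    "the bank closed early",
]


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark sentence boundaries.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Maximum-likelihood estimate of P(next word | previous word)."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: n / total for word, n in following.items()} if total else {}


def most_probable_next(counts: dict[str, Counter], prev: str) -> Optional[str]:
    """Pick the single most probable continuation, or None if prev is unseen."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


COUNTS = train_bigrams(CORPUS)
```

Here "the" is followed by "bank" twice and by "loan" and "river" once each, giving probabilities of 0.5, 0.25 and 0.25. Practical systems used longer n-grams plus smoothing so that unseen word pairs do not receive zero probability; this sketch omits both.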

This shift towards data-driven approaches laid the groundwork for the machine learning revolution that would transform AI chatbots in the following decades.

The Machine Learning Revolution

The late 1990s and early 2000s brought about a fundamental transformation in AI chatbot development with the widespread adoption of machine learning techniques. This shift represented a move away from explicitly programmed behaviours towards systems that could learn and improve from experience.

Supervised Learning

Supervised learning techniques allowed chatbots to be trained on large datasets of conversation examples, learning to map inputs to appropriate outputs. This approach enabled more natural and contextually appropriate responses than rule-based systems could achieve.

The availability of large conversational datasets, including chat logs, customer service interactions, and online forums, provided the training material necessary for these systems to learn the patterns and conventions of human conversation.
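One common supervised set-up is to label example utterances with an intent and train a classifier that maps new inputs to a suitable response. The sketch below uses a hand-rolled multinomial naive Bayes classifier with add-one (Laplace) smoothing as a simple stand-in for the models used in practice; the training examples, intent names and canned replies are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labelled training data: (utterance, intent).
TRAINING_DATA = [
    ("where is my order", "track_order"),
    ("has my parcel shipped yet", "track_order"),
    ("i want my money back", "refund"),
    ("how do i get a refund", "refund"),
    ("hello there", "greeting"),
    ("hi good morning", "greeting"),
]

# Hypothetical canned reply for each intent.
RESPONSES = {
    "track_order": "Let me check the status of your order.",
    "refund": "I can help you start a refund.",
    "greeting": "Hello! How can I help?",
}


def train(data):
    """Count word frequencies per intent and examples per intent."""
    word_counts = defaultdict(Counter)
    intent_counts = Counter()
    vocab = set()
    for text, intent in data:
        words = text.lower().split()
        word_counts[intent].update(words)
        intent_counts[intent] += 1
        vocab.update(words)
    return word_counts, intent_counts, vocab


def classify(text, model):
    """Return the intent with the highest log posterior."""
    word_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())
    best_intent, best_score = None, -math.inf
    for intent, n_examples in intent_counts.items():
        score = math.log(n_examples / total)  # log prior P(intent)
        denom = sum(word_counts[intent].values()) + len(vocab)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[intent][word] + 1) / denom)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


MODEL = train(TRAINING_DATA)


def reply_to(text):
    """Map a new utterance to the canned reply for its predicted intent."""
    return RESPONSES[classify(text, MODEL)]
```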

Unsupervised Learning

Unsupervised learning techniques enabled chatbots to discover patterns in language from unlabelled text. These methods could identify topics, extract key concepts, and uncover the structure of conversations without human annotation.

Reinforcement Learning

Reinforcement learning introduced the concept of learning through interaction and feedback. Chatbots could improve their performance by receiving rewards for successful interactions and penalties for failures, gradually developing more effective conversational strategies.
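Reinforcement learning for full dialogues is involved, but its core loop (try an action, observe a reward or penalty, update an estimate) can be shown with an epsilon-greedy multi-armed bandit, the simplest reinforcement-learning setting. This is a deliberately simplified stand-in with no conversation state; the strategy names and the simulated success rates are made up.

```python
import random

# Hypothetical response strategies the bot can choose between.
STRATEGIES = ["short_answer", "detailed_answer", "clarifying_question"]

# Made-up chance that a simulated user rates each strategy as helpful.
SUCCESS_RATE = {
    "short_answer": 0.3,
    "detailed_answer": 0.7,
    "clarifying_question": 0.5,
}


def run_bandit(episodes: int = 5000, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Epsilon-greedy estimation of the average reward of each strategy."""
    rng = random.Random(seed)
    value = {s: 0.0 for s in STRATEGIES}  # running mean reward per strategy
    count = {s: 0 for s in STRATEGIES}
    for _ in range(episodes):
        # Explore with probability epsilon; otherwise exploit the best estimate.
        if rng.random() < epsilon:
            choice = rng.choice(STRATEGIES)
        else:
            choice = max(STRATEGIES, key=value.get)
        # Simulated feedback: +1 reward for success, -1 penalty for failure.
        reward = 1.0 if rng.random() < SUCCESS_RATE[choice] else -1.0
        count[choice] += 1
        # Incremental update of the running mean.
        value[choice] += (reward - value[choice]) / count[choice]
    return value
```

With these made-up numbers the expected reward of `detailed_answer` is 0.7 × 1 + 0.3 × (-1) = 0.4, so after enough episodes the bot should favour it, while the occasional exploratory choice keeps the other estimates up to date.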

This period also saw the development of more sophisticated evaluation metrics and benchmarks for assessing chatbot performance, which looked beyond whether a response matched an expected pattern to consider factors like coherence, relevance, and user satisfaction.

The Deep Learning Breakthrough

The 2010s witnessed a revolutionary transformation in AI chatbot capabilities with the emergence of deep learning and neural network architectures specifically designed for natural language processing.

Recurrent Neural Networks (RNNs)

RNNs, which date back to the 1980s but came into wide use for language processing in this period, represented a significant breakthrough by introducing memory into neural networks: a hidden state carried from one step to the next lets them process sequences of text whilst retaining context from earlier parts of the conversation. This capability was crucial for creating chatbots that could maintain coherent dialogue over multiple exchanges.
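In the simplest (Elman-style) recurrent network, that memory is a hidden state vector updated once per token. In standard notation (not taken from this article), with $x_t$ the input at step $t$, $h_t$ the hidden state and $y_t$ the output:

```latex
\begin{aligned}
h_t &= \tanh\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \\
y_t &= W_{hy}\, h_t + b_y
\end{aligned}
```

The same weight matrices $W_{xh}$, $W_{hh}$ and $W_{hy}$ are reused at every step, and because $h_t$ depends on $h_{t-1}$, earlier tokens can influence later outputs. The same dependency also forces tokens to be processed one after another.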

Long Short-Term Memory (LSTM) Networks

LSTM networks, first proposed in 1997, addressed the limitations of basic RNNs by mitigating the "vanishing gradient problem," enabling them to maintain context over much longer sequences. This advancement allowed chatbots to reference information from earlier in the conversation and maintain thematic coherence across extended interactions.
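In the widely used formulation with a forget gate (an addition made a few years after the original 1997 design), an LSTM keeps a separate cell state $c_t$ controlled by three gates. With $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication and $[h_{t-1}, x_t]$ the concatenation of the previous hidden state and the current input:

```latex
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

Because $c_t$ is updated additively rather than being squashed through a nonlinearity at every step, gradients can flow back through many steps without shrinking as quickly, which is how the architecture eases the vanishing gradient problem.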

Attention Mechanisms

The introduction of attention mechanisms allowed neural networks to focus selectively on different parts of the input when generating responses. This capability enabled more nuanced understanding of context and improved the relevance of generated responses.

Transformer Architecture

The development of the transformer architecture in 2017 represented perhaps the most significant breakthrough in natural language processing since the field's inception. Transformers abandoned the sequential processing limitations of RNNs in favour of parallel processing with sophisticated attention mechanisms.

Key innovations of the transformer architecture include:

Self-Attention: The ability to relate different positions within a sequence to compute representations, enabling the model to understand complex relationships within text.

Parallel Processing: Unlike RNNs, transformers could process every position of a sequence simultaneously during training, dramatically improving training efficiency and enabling the use of much larger datasets.

Scalability: Transformer models could be scaled to enormous sizes, with billions of parameters, enabling them to capture increasingly sophisticated patterns in language.
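The self-attention described above is usually written as $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$, where the queries $Q$, keys $K$ and values $V$ are linear projections of the input. Below is a minimal single-head sketch in plain Python for readability. Real implementations use tensor libraries, multiple heads and learned projection matrices; here identity matrices stand in for the learned weights, and the toy token embeddings are made up.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    peak = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix):
    """Single-head scaled dot-product self-attention over the rows of x.

    Each row of x is one token embedding. Returns (outputs, weights), where
    weights[i][j] is how strongly token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Three toy 2-dimensional token embeddings; the identity matrix stands in
# for the learned projection weights of a real model.
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]
TOKENS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(TOKENS, IDENTITY, IDENTITY, IDENTITY)
```

Each row of the score matrix can be computed independently of the others, which is the property that lets transformers handle all positions of a sequence at once instead of stepping through them like an RNN.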

The Era of Large Language Models

The late 2010s and early 2020s have been defined by the emergence of large language models (LLMs) that have transformed our understanding of what AI chatbots can achieve.

BERT (Bidirectional Encoder Representations from Transformers)

Google's BERT, introduced in 2018, demonstrated the power of bidirectional training, where the model learns to predict missing words by considering context from both directions. This approach significantly improved understanding of language nuance and context.
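The masked-language-model objective behind this can be sketched as a data-preparation step. Following the recipe described in the BERT paper, roughly 15% of tokens are chosen as prediction targets; of those, 80% are replaced by a `[MASK]` token, 10% by a random token and 10% left unchanged. Choosing each token independently with probability `mask_prob`, as below, is a simplification, and the function name and toy vocabulary are made up.

```python
import random
from typing import Optional

MASK = "[MASK]"


def mask_tokens(tokens: list[str], vocab: list[str], rng: random.Random,
                mask_prob: float = 0.15) -> tuple[list[str], list[Optional[str]]]:
    """Build one masked-language-model training example.

    Returns (inputs, labels): the corrupted sequence fed to the model and, for
    each position, the original token it must predict (None if no target).
    """
    inputs: list[str] = []
    labels: list[Optional[str]] = []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)  # this position becomes a prediction target
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)               # 80%: hide the token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random replacement
            else:
                inputs.append(token)              # 10%: leave it unchanged
        else:
            inputs.append(token)
            labels.append(None)
    return inputs, labels


VOCAB = ["the", "cat", "sat", "on", "mat", "dog"]
inputs, labels = mask_tokens("the cat sat on the mat".split(), VOCAB, random.Random(0))
```

Because the model sees every unmasked token on both sides of a target, its prediction can draw on left and right context at once, which is what "bidirectional" refers to here.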

GPT Series (Generative Pre-trained Transformer)

OpenAI's GPT series, beginning with GPT-1 in 2018 and evolving through GPT-2, GPT-3, and GPT-4, has pushed the boundaries of what conversational AI can accomplish. These models, trained on vast amounts of text from the internet, demonstrate remarkable abilities in:

Few-Shot Learning: The ability to perform new tasks with minimal examples

Creative Writing: Generating original poetry, stories, and creative content

Code Generation: Writing functional computer programs based on natural language descriptions

Reasoning: Solving complex problems through step-by-step logical thinking
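Few-shot learning of this kind usually happens entirely in the prompt: a handful of worked examples precede the new input, and the model continues the pattern without any change to its weights. The sketch below only assembles such a prompt; the `Input:`/`Output:` layout, the function name and the example task are arbitrary choices, and the call that would send the prompt to a model is omitted because it depends on the provider's API.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Assemble an instruction, worked examples and a new query into one prompt."""
    parts = [instruction, ""]
    for source, target in examples:
        parts.append(f"Input: {source}")
        parts.append(f"Output: {target}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")  # the model is expected to continue from here
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Convert each sentence to British spelling.",
    [("The color is gray.", "The colour is grey."),
     ("I organized the center.", "I organised the centre.")],
    "We analyzed the catalog.",
)
```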

Scale and Emergence

One of the most remarkable discoveries of the LLM era has been the phenomenon of emergent abilities - capabilities that appear to arise abruptly as models reach certain scales. Abilities like few-shot learning, chain-of-thought reasoning, and creative problem-solving seem to emerge naturally from sufficiently large and well-trained models, although some researchers argue that this apparent suddenness partly reflects the metrics used to measure it.

Modern AI Chatbots: Capabilities and Applications

Today's AI chatbots represent the culmination of decades of research and development, offering capabilities that would have seemed like science fiction just a few years ago.

Advanced Conversational Abilities

Modern chatbots can:

- Maintain context across long conversations

- Understand and respond to complex, multi-part questions

- Engage in creative and analytical thinking

- Adapt their communication style to different audiences

- Handle ambiguous or incomplete queries

Multimodal Capabilities

Recent developments have enabled chatbots to process and generate not just text, but also images, audio, and even video content. This multimodal capability opens up new possibilities for interaction and application.

Specialisation and Fine-Tuning

Modern chatbots can be fine-tuned for specific domains, industries, or use cases, combining general conversational ability with specialised knowledge and behaviour patterns.

Real-World Applications

Today's AI chatbots serve in numerous roles:

Customer Service: Handling routine enquiries, troubleshooting problems, and escalating complex issues to human agents

Education: Providing personalised tutoring, answering questions, and supporting learning across various subjects

Healthcare: Offering preliminary health information, appointment scheduling, and mental health support

Content Creation: Assisting with writing, editing, brainstorming, and creative projects

Programming: Helping developers write code, debug problems, and learn new technologies

Business Intelligence: Analysing data, generating reports, and providing insights for decision-making

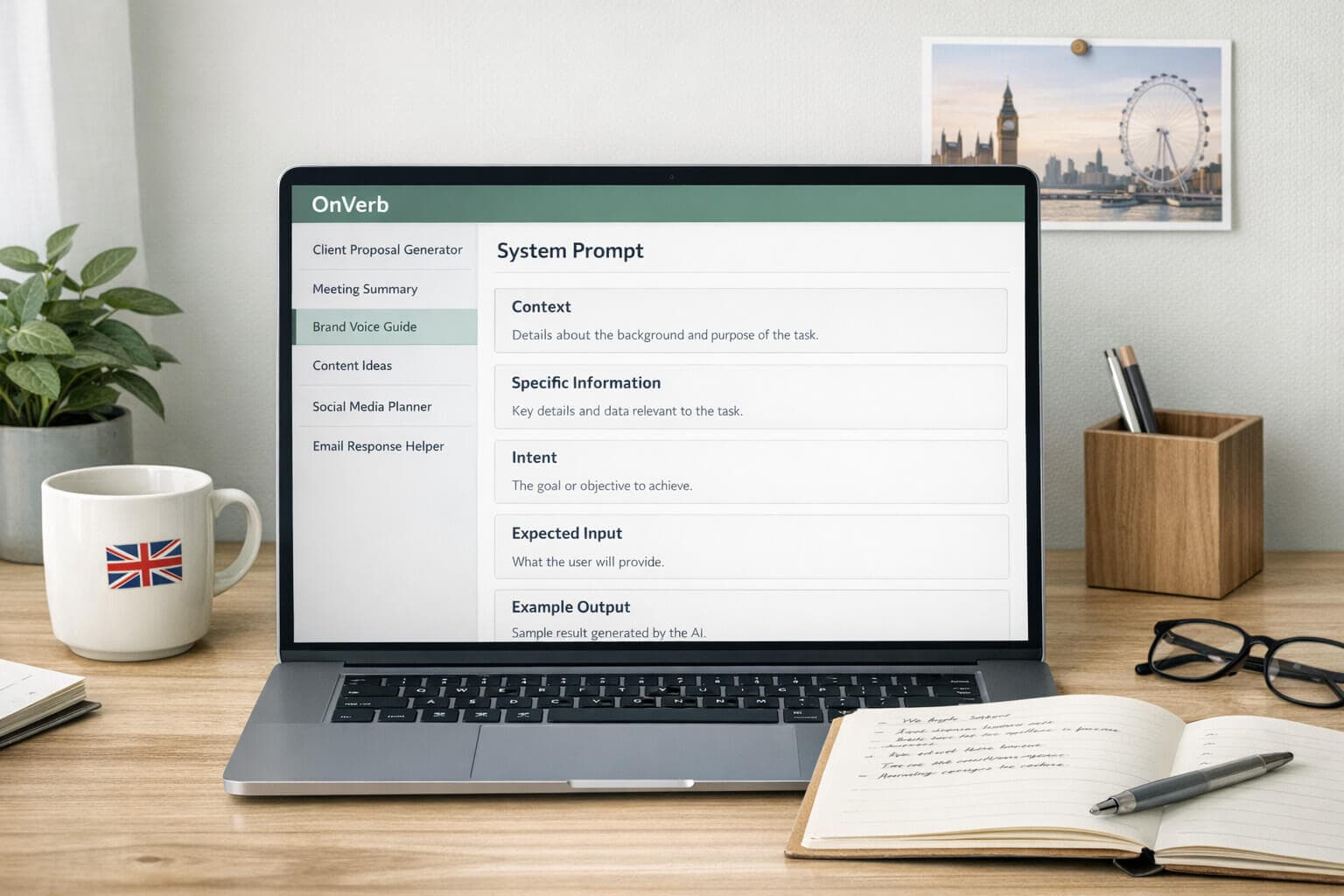

The OnVerb Revolution: Democratising Advanced AI

Platforms like OnVerb represent the next evolution in making advanced AI chatbot capabilities accessible to everyone. By providing sophisticated system prompt functionality and seamless integration with leading AI models, OnVerb enables users to harness the full power of modern conversational AI without requiring technical expertise.

OnVerb's approach addresses several key challenges in AI chatbot deployment:

Accessibility: Making advanced AI capabilities available to non-technical users through intuitive interfaces

Customisation: Enabling deep customisation through comprehensive system prompts without requiring programming knowledge

Integration: Seamlessly connecting with multiple AI models to provide the best tool for each specific task

Scalability: Supporting everything from individual use cases to enterprise-wide deployments

Challenges and Ethical Considerations

As AI chatbots become increasingly sophisticated, several important challenges and ethical considerations have emerged:

Bias and Fairness

AI chatbots can inadvertently perpetuate or amplify biases present in their training data, leading to unfair or discriminatory responses. Addressing these biases requires ongoing vigilance and sophisticated mitigation strategies.

Privacy and Data Security

The conversational nature of chatbots means they often handle sensitive personal information. Ensuring robust privacy protection and data security is crucial for maintaining user trust.

Transparency and Explainability

As chatbots become more sophisticated, understanding how they arrive at their responses becomes increasingly challenging. Developing methods for explaining AI decision-making is an active area of research.

Misinformation and Hallucination

AI chatbots can sometimes generate false or misleading information with confidence, a phenomenon known as "hallucination." Developing methods to detect and prevent such errors is crucial for reliable deployment.

Human Displacement

As chatbots become more capable, concerns arise about their potential to displace human workers. Balancing automation benefits with employment considerations requires careful thought and planning.

The Future Landscape: Emerging Trends and Possibilities

Looking ahead, several exciting trends are shaping the future of AI chatbots:

Increased Contextual Understanding

Future chatbots will likely demonstrate even more sophisticated understanding of context, including emotional context, cultural nuances, and implicit meanings.

Enhanced Personalisation

AI chatbots will become increasingly adept at adapting to individual users' preferences, communication styles, and needs, providing truly personalised experiences.

Multimodal Integration

The integration of text, speech, vision, and other modalities will create more natural and versatile interaction paradigms.

Collaborative Intelligence

Rather than replacing humans, future chatbots will likely focus on augmenting human capabilities, creating powerful human-AI collaborative systems.

Ethical AI Development

Continued focus on developing AI systems that are fair, transparent, and aligned with human values will be crucial for widespread adoption and trust.

Real-Time Learning

Future chatbots may be able to learn and adapt in real-time from their interactions, continuously improving their performance and understanding.

Conclusion: The Continuing Evolution

The journey from Alan Turing's theoretical musings to today's sophisticated AI chatbots represents one of the most remarkable achievements in the history of technology. Each milestone - from ELIZA's simple pattern matching to GPT-4's sophisticated reasoning - has built upon previous innovations whilst opening new possibilities for human-computer interaction.

Today's AI chatbots are not merely tools; they are collaborative partners capable of augmenting human intelligence and creativity in unprecedented ways. Platforms like OnVerb are democratising access to these powerful capabilities, enabling individuals and organisations to harness advanced AI without requiring deep technical expertise.

As we look to the future, the potential for AI chatbots to transform how we work, learn, create, and communicate appears boundless. The key to realising this potential lies in continued innovation balanced with careful attention to ethical considerations and human values.

The story of AI chatbots is far from over. Each new breakthrough brings us closer to Turing's vision of truly intelligent machines whilst opening up possibilities that even he might not have imagined. As we continue this remarkable journey, one thing remains certain: the conversation between humans and artificial intelligence has only just begun.

The evolution continues, and with platforms like OnVerb leading the way in making advanced AI accessible to everyone, we are all invited to participate in shaping the next chapter of this extraordinary story.

This comprehensive exploration of AI chatbot history was crafted using OnVerb's advanced system prompt capabilities, demonstrating how modern AI can synthesise complex historical narratives whilst maintaining accuracy, engagement, and insight throughout.